Let’s say I want to build a financial market prediction model based on news flow. The current way to do this is:

- Collect a large number of news articles

- Collect financial market movement data

- Use machine learning models (black-box or otherwise) to predict markets from news
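The three steps above can be sketched as a toy pipeline. This is a minimal illustration, not a real trading model. The headlines and up/down labels are invented, and a bag-of-words perceptron stands in for whatever model one might actually use. The point is that every word weight starts at zero and is learned only from labelled examples.

```python
from collections import Counter

def featurize(text: str) -> Counter:
    """Bag-of-words: map each lower-cased token to its count in the headline."""
    return Counter(text.lower().split())

def score(weights: dict, bias: float, text: str) -> float:
    return bias + sum(weights.get(w, 0.0) * c for w, c in featurize(text).items())

def train_perceptron(examples, epochs: int = 10):
    """Learn one weight per word from scratch; label +1 = market up, -1 = down."""
    weights: dict[str, float] = {}
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            if label * score(weights, bias, text) <= 0:  # wrong or undecided: update
                for w, c in featurize(text).items():
                    weights[w] = weights.get(w, 0.0) + label * c
                bias += label
    return weights, bias

def predict(weights: dict, bias: float, text: str) -> int:
    return 1 if score(weights, bias, text) > 0 else -1

# Hypothetical toy data, invented purely for illustration (not market data).
TRAIN = [
    ("central bank cuts rates", 1),
    ("strong earnings beat estimates", 1),
    ("company misses earnings estimates", -1),
    ("central bank hikes rates", -1),
]

weights, bias = train_perceptron(TRAIN)
print(predict(weights, bias, "bank cuts rates again"))  # → 1
```

Nothing in this model carries over to another task: a new market, a new language or new rules would mean collecting labels and training again from zero.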

This is not a scalable way to build AI/ML solutions. It requires every problem to be solved from first principles, and it requires any ML solution to consume millions of data points. In a way, companies are doing exactly this when they build autonomous driving solutions from raw pixel data or streaming sensor data. Humans don’t learn this way. Nor will this approach be robust: even a small change in the rules of the game would require millions of new training examples.

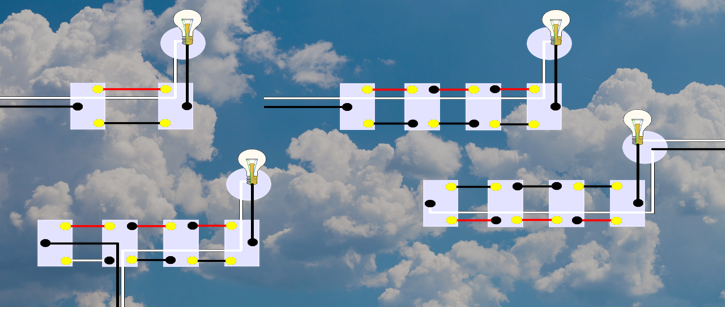

Psychologists believe that humans are intelligent because of a combination of nature (genetic evolution over time) and nurture (learning over a lifetime). The approach above puts the entire burden on nurture, with no pre-built circuitry or parameters. I believe this will not solve problems robustly. Instead, I propose a more collaborative approach built on “primitives”. Going back to financial market prediction from news, for example, the solution can be built in several layers.

I’ve split the solution into three layers, each delivered as a separate platform. I’ve chosen the word platform deliberately, and the reason will hopefully become clear by the end of this article. All the platforms could be built by the same entity (let’s say G-Square), but that would not make sense. A platform like Google has access to far more text data and can build vector representations more efficiently. These representations can evolve continuously as language itself evolves, just as human genes evolve and form the basis for further nurture. The vector representations can also be used for a variety of purposes beyond financial markets.
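A minimal sketch of the layering idea, under stated assumptions: a hypothetical language platform publishes word vectors, and a thin, task-specific finance layer learns only a small decision rule on top of them. The 3-dimensional vectors, the `WORD_VECTORS` table and the difference-of-means classifier are all illustrative assumptions, not any real provider's API.

```python
import math

# Hypothetical shared layer: a "language platform" publishing word vectors.
# These toy 3-d vectors are made up; a real provider would learn far larger
# vectors from a huge corpus and keep updating them as language evolves.
WORD_VECTORS: dict[str, list[float]] = {
    "cuts":   [0.9, 0.1, 0.0],
    "lowers": [0.8, 0.2, 0.0],
    "hikes":  [-0.9, 0.1, 0.0],
    "raises": [-0.8, 0.2, 0.0],
    "rates":  [0.0, 0.0, 1.0],
    "bank":   [0.0, 0.5, 0.5],
}
DIM = 3

def embed_text(text: str) -> list[float]:
    """Average the vectors of known words; unknown words are skipped."""
    vecs = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vecs:
        return [0.0] * DIM
    return [sum(col) / len(vecs) for col in zip(*vecs)]

def dot(a, b) -> float:
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b) -> float:
    norm = math.sqrt(dot(a, a)) * math.sqrt(dot(b, b))
    return dot(a, b) / norm if norm else 0.0

# Hypothetical task layer: a thin finance model on top of the shared vectors.
def fit_direction(examples):
    """Difference-of-means classifier; needs at least one up and one down example."""
    up = [embed_text(t) for t, y in examples if y == 1]
    down = [embed_text(t) for t, y in examples if y == -1]
    mu_up = [sum(c) / len(up) for c in zip(*up)]
    mu_down = [sum(c) / len(down) for c in zip(*down)]
    direction = [u - d for u, d in zip(mu_up, mu_down)]
    midpoint = [(u + d) / 2 for u, d in zip(mu_up, mu_down)]
    return direction, dot(direction, midpoint)  # threshold sits halfway between means

def predict(direction, threshold, text: str) -> int:
    return 1 if dot(direction, embed_text(text)) > threshold else -1

direction, threshold = fit_direction([
    ("central bank cuts rates", 1),
    ("central bank hikes rates", -1),
])
print(predict(direction, threshold, "bank lowers rates"))  # → 1 ("lowers" was never labelled)
print(predict(direction, threshold, "bank raises rates"))  # → -1

# The same shared vectors serve an unrelated task: word similarity.
nearest = max((w for w in WORD_VECTORS if w != "cuts"),
              key=lambda w: cosine(WORD_VECTORS["cuts"], WORD_VECTORS[w]))
print(nearest)  # → lowers
```

With only two labelled headlines, the finance layer handles "lowers" and "raises" because the shared vectors already place them near "cuts" and "hikes". A model trained from scratch on the same two headlines would have no information about either word.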

Vector representations of words could be just one of several primitives necessary for building general AI. Others I can think of are edge detection in images, object identification, etc. But unlike vector representations, there is no clear-cut, well-defined schema for representing other types of knowledge. Over time, some standardisation may emerge.

Which brings me to the next idea. The primitives for AI will have to reside in the cloud so that any downstream algorithm can access them. Thus the nature of AI will be very different from human intelligence. Human intelligence is highly individual: although it improves over time by learning from predecessors, much of the learning happens within a single lifetime and ends with it. There is no shared ML algorithm across all of humanity, or at least not an explicit one. The AI I envisage will be a connected AI residing in the cloud, not on individual machines or even individual platforms. That is why I prefer to call the primitive provider a platform. Engineers can kludge together a collection of cloud-based AI primitives to form applications. A limited portion of the learning (especially the last mile) could reside on a single machine or a standalone platform. The power of the cloud lies in the fact that several platforms can collaborate, compete and evolve much faster than biological entities did.
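The composition described above might look like the following toy sketch. Everything in it is hypothetical: the `Registry` class stands in for cloud-hosted services, the two "platforms" are local lambdas, and the lexicon and headlines are invented. It shows competing providers of one primitive being chosen on local data, followed by a small last-mile step (a score threshold) learned locally.

```python
from dataclasses import dataclass, field
from typing import Callable

# A "primitive" maps text to a score. Hypothetical: real cloud primitives would
# be remote services; plain local functions stand in for them here.
Primitive = Callable[[str], float]

@dataclass
class Registry:
    """Toy stand-in for the cloud, where several platforms publish competing primitives."""
    providers: dict[str, dict[str, Primitive]] = field(default_factory=dict)

    def publish(self, capability: str, provider: str, fn: Primitive) -> None:
        self.providers.setdefault(capability, {})[provider] = fn

    def best(self, capability: str, data: list[tuple[str, int]]) -> str:
        """Return the provider whose score signs agree most often with labels (+1/-1)."""
        def accuracy(name: str) -> int:
            fn = self.providers[capability][name]
            return sum((fn(x) > 0) == (y > 0) for x, y in data)
        return max(self.providers[capability], key=accuracy)

def last_mile_threshold(fn: Primitive, data: list[tuple[str, int]]) -> float:
    """Local learning: pick the cut-off on the shared score that best fits local labels."""
    scores = sorted({fn(x) for x, _ in data})
    # Candidates: below every score, plus midpoints between consecutive scores.
    candidates = [scores[0] - 1.0] + [(a + b) / 2 for a, b in zip(scores, scores[1:])]
    def accuracy(t: float) -> int:
        return sum((fn(x) > t) == (y > 0) for x, y in data)
    return max(candidates, key=accuracy)

registry = Registry()
LEXICON = {"cuts": 1.0, "beat": 1.0, "hikes": -1.0, "misses": -1.0}  # hypothetical
registry.publish("news_sentiment", "platform_a",
                 lambda text: sum(LEXICON.get(w, 0.0) for w in text.lower().split()))
registry.publish("news_sentiment", "platform_b", lambda text: 1.0)  # always bullish

local = [("bank cuts rates", 1), ("earnings beat", 1),
         ("bank hikes rates", -1), ("earnings misses", -1)]
chosen = registry.best("news_sentiment", local)
shared_score = registry.providers["news_sentiment"][chosen]
threshold = last_mile_threshold(shared_score, local)
print(chosen, threshold)  # → platform_a 0.0
```

Only the threshold is learned on the local machine; the heavy lifting stays with whichever shared provider wins on local data, and a better provider can be swapped in without touching the application.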
