Experiential Learning: Neural Networks in Sales Modelling

Background

In our regular sales modeling for clients, we primarily relied on traditional algorithms such as random forest and XGBoost models due to the tabular nature of our data and the success we achieved with these models. However, in our latest modeling project, we decided to explore Neural Networks. The results we obtained were highly encouraging, leading us to consider incorporating Neural Network algorithms more frequently in these modeling tasks.

In this experimental modeling project, we are developing two distinct models: The Target Model and the Probability Model.

-

Target Model: The Target Model is designed to predict specific targets for customers. It involves training the model against the target variable, which is actual sales amount. The Target Model aims to generate precise predictions for the sales amount.

-

Probability Model: The Probability Model, on the other hand, is tailored for binary classification tasks. In this case, the model is trained to predict the probability of each class (whether customer will take up the product or not) for all customers. Instead of directly predicting the class labels, the Probability Model assigns probabilities to each class, indicating the likelihood of a customer belonging to a particular category.

Neural Networks

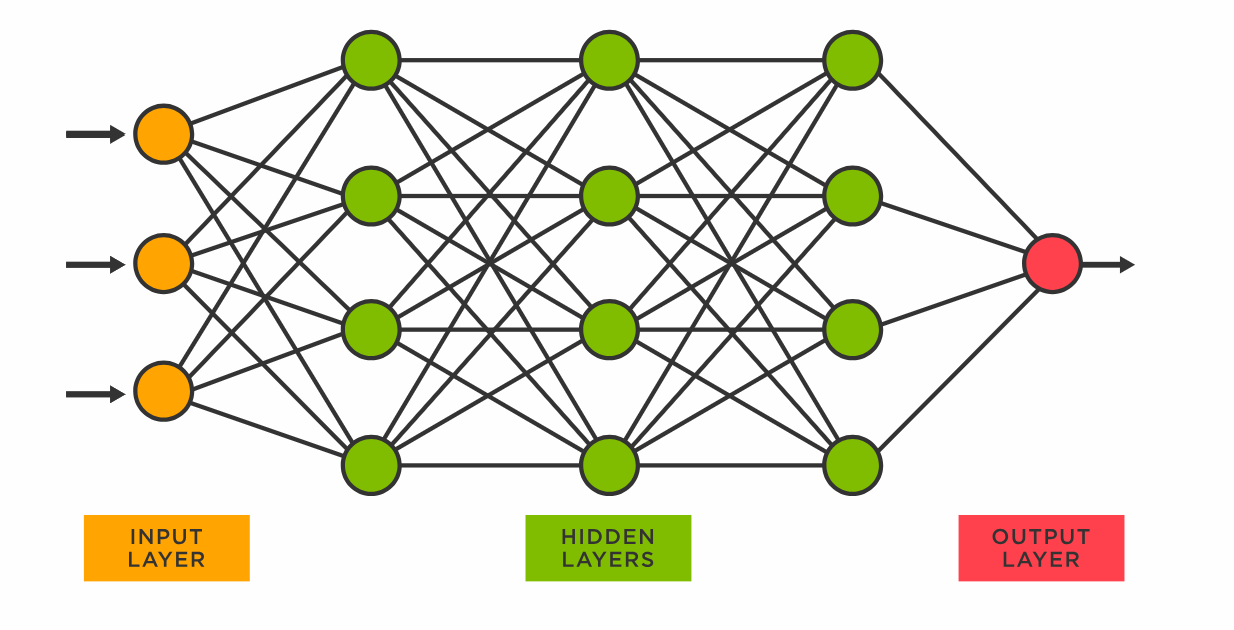

Each of these connections has weights that determine the influence of one unit on another unit. As the data transfers from one unit to another, the neural network learns more and more about the data which eventually results in an output from the output layer.

Fig.1. Neural Network

Multi-Layer Perceptron:

Multi-Layer Perceptrons (MLPs) are an essential and foundational architecture. MLPs are the simplest form of feedforward neural networks and serve as building blocks for more complex deep learning models.

Henceforth, when we mention “Multi-Layer Perceptron,” it simply refers to a neural network.

Neural networks have several advantages that make them well-suited for sales modeling:

-

Non-linear Modeling: Neural networks are highly effective at capturing complex non-linear relationships in the data. Sales data often exhibits intricate patterns and interactions between various factors, and neural networks excel at learning these complex mappings.

-

Feature Learning: Neural networks can automatically learn relevant features from the raw data, reducing the need for manual feature engineering. This capability is beneficial when dealing with high-dimensional and unstructured data, common in sales datasets.

-

Scale and Size: Sales datasets can be large and contain massive amounts of data. Neural networks can efficiently handle big data and can be scaled to accommodate larger models and deeper architectures for more complex tasks.

-

Prediction Accuracy: Neural networks, especially deep learning models with multiple layers, can achieve state-of-the-art prediction accuracy in a wide range of applications, including sales forecasting, customer segmentation, and churn prediction.

-

Adaptability: Sales environments are dynamic and subject to changes over time. Neural networks can be retrained or updated to adapt to evolving patterns and trends in the sales data.

-

Handling Multimodal Data: Sales data often includes diverse information like text, images, and numerical data. Neural networks, with architectures like CNNs and Transformers, can effectively process and integrate information from multiple modalities.

Model building & Performance:

Target Modeling:

We utilized the MLPRegressor class from scikit-learn (sklearn) for its straightforward implementation, smoothly importing it from the sklearn.neural_network module.

Our primary goal was to build an up-selling model, focusing on establishing relationships between various features and target sale amounts. We had a substantial dataset of 120,000 rows, spanning across 108 features. To handle the high dimensionality of the data, we employed SVD decomposition to reduce its dimensionality.

To ensure a fair comparison, we trained three distinct models with roughly equivalent parameters: Random Forest Regressor, XGBoost Regressor, and MLP Regressor. The results of these models are presented in the table below.

| Data size | Model | Time taken | R2 | MAE | ||

| in sec | Train | Test | Trian | Test | ||

|

120000 |

RF | 30 | 0.29 | 0.29 | 30292 | 30000 |

| XGB | 15 | 0.47 | 0.43 | 26305 | 27456 | |

| MLP | 75 | 0.52 | 0.46 | 25338 | 26587 | |

Table 1

We can observe that the MLP is outperforming the traditional models by a significant margin. It is noteworthy that the available data is comparatively limited for neural networks. Despite this limitation, the MLP model is exhibiting superior performance compared to the other two models. This emphasizes the strength and adaptability of the MLP architecture in handling even smaller datasets effectively.

We conducted an additional experiment, this time instead of using all 108 features, the model is provided with only 15 selected features, each having a correlation above 0.1 with our target variable. The outcomes of this experiment are as follows:

| Data size | Model | Time taken | R2 | MAE | ||

| in sec | Train | Test | Trian | Test | ||

|

120000 |

RF | 14 | 0.54 | 0.54 | 25481 | 25671 |

| XGB | 11 | 0.61 | 0.58 | 23000 | 23900 | |

| MLP | 40 | 0.58 | 0.56 | 23933 | 24400 | |

Table 2

In this case as well, the MLP is performing on par with the traditional models. Despite the longer training time required by the MLP, it demonstrates no signs of overfitting and still outperforms the Random Forest Regressor.

This observation allows us to conclude that the MLP Regressor has the advantage of automatically learning relevant features from the raw data, eliminating the need for manual feature engineering. On the other hand, traditional models necessitate manual feature engineering to achieve comparable performance.

The experiment results strongly suggest that the flexibility and feature learning capabilities of the MLP make it a promising choice for modeling tasks, especially when dealing with complex data with many features.

Probability Model:

We also developed a probability model for cross-selling a product to similar customers. The goal was to train a model that predicts the probability of a customer buying a product. The outcomes for the same models are as follows:

| Data size | Model | Time taken | Gini | Sensitivity | Specificity | |

| in sec | Train | Test | ||||

|

900000 |

RF | 331 | 80 | 80 | 53 | 85 |

| XGB | 145 | 81 | 81 | 55 | 70 | |

| MLP | 54 | 81 | 81 | 65 | 90 | |

Table 3

It’s remarkable to find that even the probability model using MLP performs as well as the traditional models. The most surprising aspect is the efficient time taken by the MLP to learn from 9 million data points, only 54 seconds, which is significantly less compared to the tree-based models.

This indicates the impressive scalability and computational efficiency of the MLP architecture when dealing with large datasets. The ability to handle vast amounts of data in a relatively short time makes MLPs an attractive choice for various tasks, including probability modeling and other complex machine learning applications.

Conclusion:

Indeed, neural networks, especially Multi-Layer Perceptrons(MLPs), offer significant advantages in sales modeling. Their ability to outperform traditional models on raw data, combined with the high-dimensionality often present in sales datasets, makes them highly suitable for this domain.

While it is true that neural networks are sometimes considered black boxes due to their lack of explainability, the remarkable performance of MLPs compared to traditional ML models should not be underestimated. The success of MLPs suggests that the trade-off between interpretability and predictive power could be well justified in sales modeling.

For large datasets comprising millions of data points, neural networks exhibit superior scalability and computational efficiency compared to traditional ML algorithms. As a result, when dealing with extensive datasets, neural networks should be our first preference.

Considering these observed advantages, incorporating MLPs into sales modeling can lead to more accurate predictions and deeper insights, despite the inherent complexity of neural networks. Therefore, it is highly beneficial to embrace the power of MLPs in sales modeling, particularly when tackling tasks involving raw data and high-dimensional features.

Note : The performance metrics used in this learning are for target modelling R2 and MAE. And for probability model we used gini, sensitivity and specificity.

Follow