Introduction

Sentiment Analysis (SA) in business, also known as opinion mining (OM) is a process of identifying and cataloging a piece of text according to the tone conveyed by it. The text can be tweets, comments, feedbacks, news or random texts with positive, negative and neutral sentiments associated with them.

Need for Sentiment Analysis

Sentiment analysis can be a means to optimize your marketing strategy by listening to what your customers feel and think about your brand.

Ideas to develop your product quality and how it is presented can only be derived from your target customers’ opinions. One way to do that is by conducting a structured and planned survey. Another method is to get that information from the casual discussions that are going on related to your brand in public social platforms.

Your customers can essentially become micro-influencers for you. That is why it is of utmost importance to have the best customer service in place and keep your current customers happy.

There are many factors that contribute to great customer service, such as on-time delivery, being responsive in social media, and adequate compensation for product’s errors. Sentiment analysis can pick up negative discussions, and give you real-time alerts so that you can respond quickly. If customers complain about something related to your brand, the faster you react, the more likely customers will forget being annoyed in the first place, and be satisfied with the great customer service.

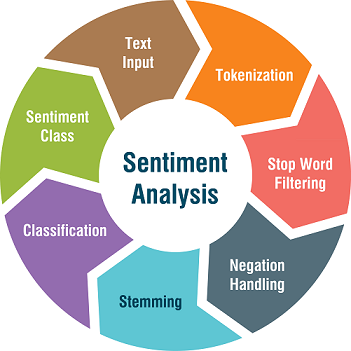

Process Overview of Sentiment analysis:

Python’s NLTK is mostly used for most of the tasks of below. You can read its comprehensive documentation at http://www.nltk.org/book/

- Understand the data

The primary step before embarking on the journey with your data is to understand it. In this case, calculating the number of uppercase words, hashtags and stopwords and noting them in a python dictionary would be helpful. Logic applied could include: Anger is expressed in text using words written in uppercase, hashtags in social media texts can be helpful in giving extra information, stopwords will be removed in preprocessing step and hence we need to understand how much information we might lose.

- Preprocessing the data

The step involves spelling correction using textblob library (doc- http://textblob.readthedocs.io/en/dev/), removal of stop words which carry least information like ‘she’, ‘into’, ‘other’, ‘most’ converting to lowercase, tokenization of text to a sequence of words and stemming which is the removal of –ly, -ing from words to its base form using PorterStemmer among many others.

- Training and classifying

After checking the training data, you may find that it’s imbalanced. If it is, then using imblearn’s many sampling techniques would be helpful. You can oversample the data using SMOTE or undersample it using NearMiss. Basically, it’s a matter of trials and tweakings and then selecting which one gives the best F1 score.

Prospective models like Naïve Bayes Classifier, SVM, RandomForest, XGBoost can be tried and validated before selection of an appropriate one using a range of metrics like precision and recall, AUC-ROC scores, accuracies etc.

Follow